After many years of working mainly in MS SQL Server as Database Developer, some months ago I started a new stage in my professional career. This includes working on more database technologies (Relational Databases) like MySQL and NoSQL as Cassandra, and even acquiring transversal knowledge in OS Linux. This article is the first of a series of three dedicated to relational databases and common challenges. Many of them are a common source of headaches, especially when many of us also tried to reinvent the wheel. This group of articles will show exactly the opposite and could help you to scape of being condemned to repeat the curse of the Sisyphus myth.

Standard approach for loading tables

This article is intended to give you a standard approach for loading tables — even with hundreds of millions of rows with source data such as a plain text file or CSV file. Before starting explaining the code that we can use, I would like to mention some scenarios where you may need to load tables in a faster, reliable, and easy way:

- Migrations: In a great percentage of migrations projects where the source databases as composed of different technologies the only feasible approach consists in export the data through plain text files.

- Cleaning: The company would start to use a set of tools for cleaning and validating data that later has to be imported again to target DB.

- Partial Load: This is less common than previous scenarios, but sometimes it is required to integrate new data coming from auxiliary systems and integrating into an existing table. In this case, we want to keep operative the use of target tables meanwhile we are storing new data.

How to load target tables

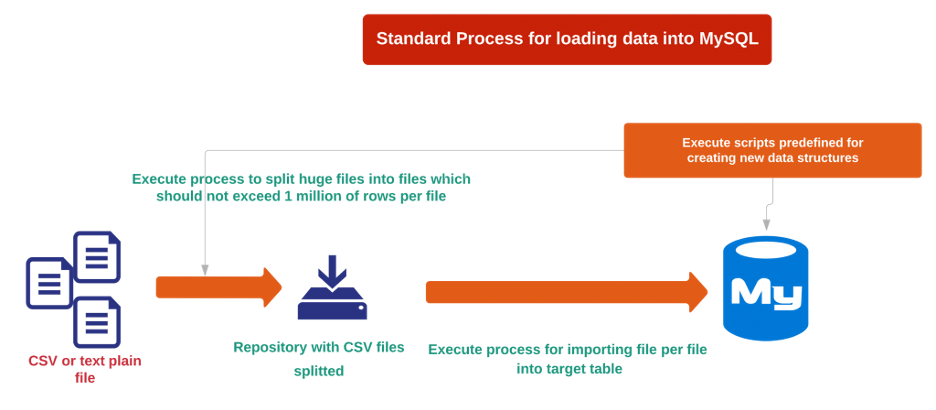

In this article, I am going to show you an approach to cover the scenarios described above. The technologies to be used basically are Linux Bash Script and MySQL. The next diagramme shows you the steps for loading target tables.

Source your CSV files and split them

The first step is taking the source CSV files and split them. You must do it only in the case where the number of rows exceeds one million. Remember, that this article is for really big data loads of a minimum of ten million to hundreds of millions of rows. Why are we aiming to split the CSV files? Because it is not feasible to try load on the fly a CSV file which contains more than 50 million rows, here another time, the quote “Divide and conquer” is true, especially because it gives you the option to split and importing the plain text files in parallel from different source servers.

Bash script, shell script and split command

Splitting a file into many fixed files is a relatively simple task through the use of Bash script. This is a powerful scripting language belong to Linux world. In this section, we want to show you practical use of some interesting shell script functionalities which play together with MySQL for solving the problem related to a massive load of data into Databases.

Using the split command is the quicker way of doing it, the next code shows you the structure of the command, and one example:

split -d -l [Number_of_Rows] [FileName] [NewName_Pattern] –additional-suffix=[File Extension]

In this case, we are going to split a file called MyFile.csv into a group of files with the pattern MyFile_ NumberOfFile. It will generate a list of files as MyFile_01.csv, MyFile_02.csv and so on. The next code will show you:

split -d -l 1000000 MyFile.csv MyFile_ –additional-suffix=.csv

Now we have a group of files split into small rows with a length of one million per file. The next stage in this process is to build a generic piece of code inside of a kind of executable file called template file. There, you can supply shell variables and build dynamic logic to consume and process the input small files. More details about it in this link.

Template code for dynamic input

The next code is a sample about the template code which help us to take dynamically the input files and processing data. You should save the code inside a file name load_mysql.tpl

SET FOREIGN_KEY_CHECKS = 0;

SET UNIQUE_CHECKS = 0;

LOAD DATA INFILE ‘/var/lib/mysql-files/csv/%FILENAME%’ IGNORE

INTO TABLE my_target_table

FIELDS TERMINATED BY ‘,’

ENCLOSED BY ‘”‘

LINES TERMINATED BY ‘\n’

(column1,column2,column3 …);

SET UNIQUE_CHECKS = 1;

SET FOREIGN_KEY_CHECKS = 1;

Inside the new bash template file, we have built a dynamic and versatile code where you have two main points. The first is the MySQL code for doing the load data into the target table. Disabling the Foreign Key is a standard recommendation which is especially important to improve the performance during the load. The use of LOAD DATA INFILE has become a good friend of mine in the last months. It’s probably the fastest way to load data from text plain files.

The second point is using dynamic logic to consume all the smaller or split CSV files. This is because you don’t want to spend the whole weekend waiting for files to load. Dynamic logic lets you automate the process which you could schedule to be executed through a cron job.

The next Bash statement allows us to get the list of files that previously we generated. This list of files will be used as input for executing a loop which should be submitted to MySQL Server. Here the example used is:

for n in `ls MyFile_*.csv`; do echo $n; sed -e ‘s/%FILENAME%/’$n’/g’ load_mysql.tpl | mysql TargetDB; done

In the first section of the previous bash statement, we initialise the loop from the load of the list MyFiles_*.csv, the next section (do echo $n) print the name of the CSV file which is processing. After that, the sed -e instructions replace the placeholder %FILENAME% with the specific csv filename loaded in every iteration. It means MyFiles_01.csv, MyFiles_02.csv , etc. The final step consists of supplying the devices_mysql.tpl input with the change inside for pointing out the specific csv filename. It is executed inside the target MySQL Database. And there you have it –every iteration executes the load into the target table.

Conclusion

Over the execution duration of this script, I observed an average load time of one minute and fifteen seconds per million of rows inserted. This number can vary in dependency of the hardware of your servers. I wasn’t able to achieve this until adopting a standard strategy which combines some of the best practices. Give it a try — it avoids the temptation of reinventing the wheel and repeating the Sisyphus myth!